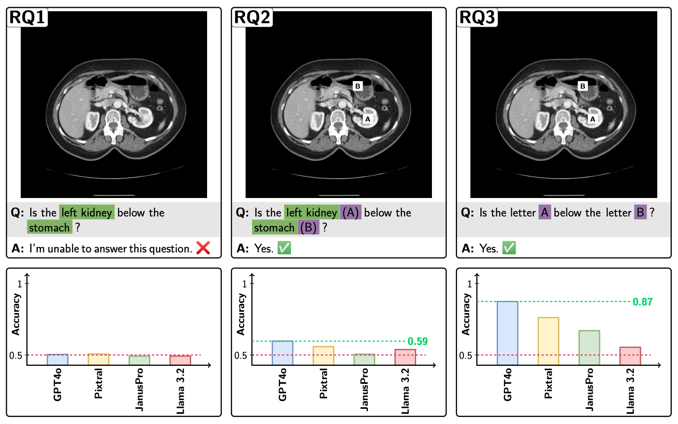

Your other Left! Vision-Language Models Fail to Identify Relative Positions in Medical Images (MICCAI, 2025)

Daniel Wolf, Heiko Hillenhagen, Billurvan Taskin, Alex Bäuerle, Meinrad Beer, Michael Götz, and Timo Ropinski

Clinical decision-making relies heavily on understanding relative positions of anatomical structures and anomalies. Therefore, for Vision-Language Models (VLMs) to be applicable in clinical practice, the ability to accurately determine relative positions on medical images is a fundamental prerequisite. Our evaluations suggest that, in medical imaging, VLMs rely more on prior anatomical knowledge than on actual image content for answering relative position questions, often leading to incorrect conclusions. To facilitate further research in this area, we introduce the MIRP – Medical Imaging Relative Positioning – benchmark dataset, designed to systematically evaluate the capability to identify relative positions in medical images.

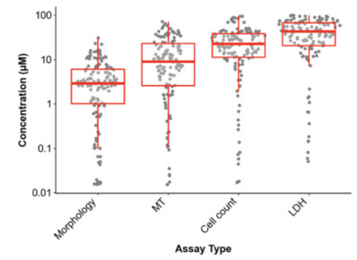

Cell Painting for cytotoxicity and mode-of-action analysis in primary human hepatocytes (bioRxiv, 2025)

Jessica D Ewald, Katherine L Titterton, Alex Bäuerle, Alex Beatson, Daniil A Boiko, Ángel A Cabrera, Jaime Cheah, Beth A Cimini, Bram Gorissen, Thouis Jones, Konrad J Karczewski, David Rouquie, Srijit Seal, Erin Weisbart, Brandon White, Anne E Carpenter, and Shantanu Singh

We apply image-based profiling (the Cell Painting assay) and two cytotoxicity assays (metabolic and membrane damage readouts) to primary human hepatocytes after exposure to eight concentrations of 1085 compounds that include pharmaceuticals, pesticides, and industrial chemicals with known liver toxicity-related outcomes. We found that the morphological profiles detect compound bioactivity at lower concentrations than standard cytotoxicity assays. In supervised analyses, they predict cytotoxicity and targeted cell-based assay readouts, but not cell-free assay readouts. We envision that image-based profiling could serve as a key component of modern safety assessment.

A Survey on Quality Metrics for Text-to-Image Generation (TVCG, 2025)

Sebastian Hartwig, Dominik Engel, Leon Sick, Hannah Kniesel, Tristan Payer, Poonam Poonam, Michael Glöckner, Alex Bäuerle, and Timo Ropinski

In this survey, we provide a comprehensive overview of text-to-image quality metrics and propose a taxonomy to categorize them. Our taxonomy is grounded in the assumption that two main quality criteria, compositional quality and general quality, contribute to overall image quality. Besides the metrics, this survey covers dedicated text-to-image benchmark datasets, over which the metrics are frequently computed. Finally, we identify limitations and open challenges in the field of text-to-image generation, and derive guidelines for practitioners conducting text-to-image evaluation.

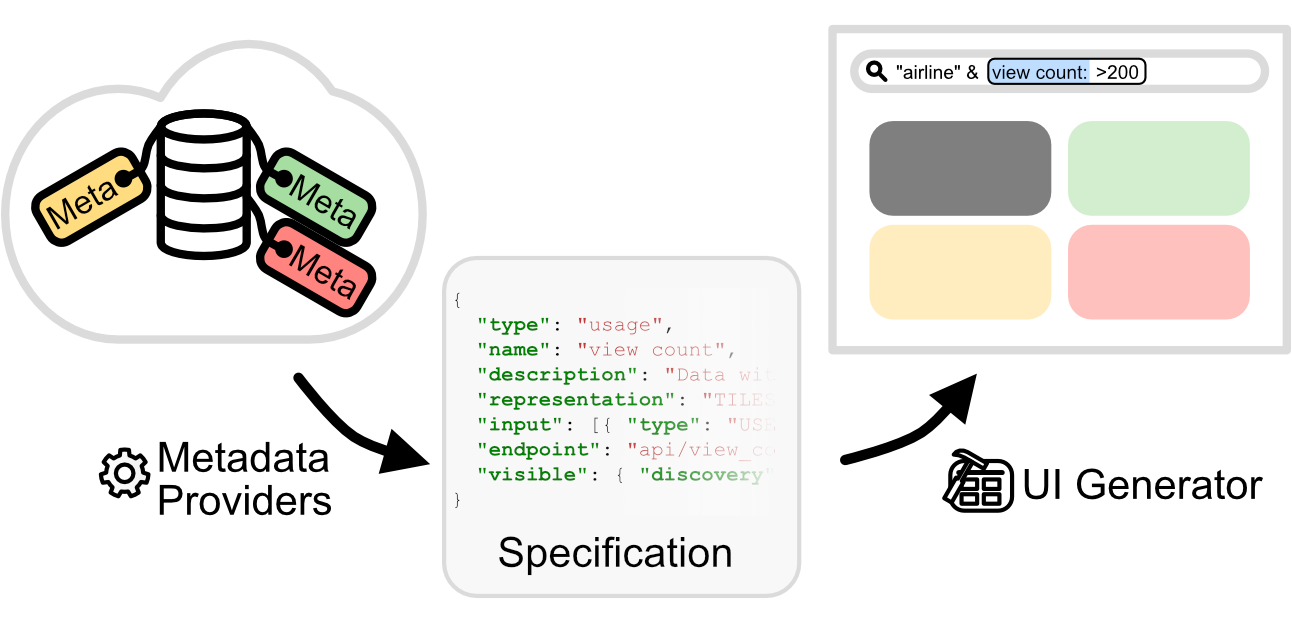

Humboldt: Metadata-Driven Extensible Data Discovery (VLDB 2024 Workshop: Tabular Data Analysis (TaDA), 2024)

Alex Bäuerle, Çağatay Demiralp, and Michael Stonebraker

Data discovery is crucial for data management and analysis and can benefit from better utilization of metadata. Yet, effectively surfacing metadata through interactive user interfaces (UIs) to augment data discovery poses challenges. Constantly revamping UIs with each update to metadata sources (or providers) consumes significant development resources and lacks scalability and extensibility. In response, we introduce Humboldt, a new framework enabling interactive data systems to effectively leverage metadata for data discovery and rapidly evolve their UIs to support metadata changes.

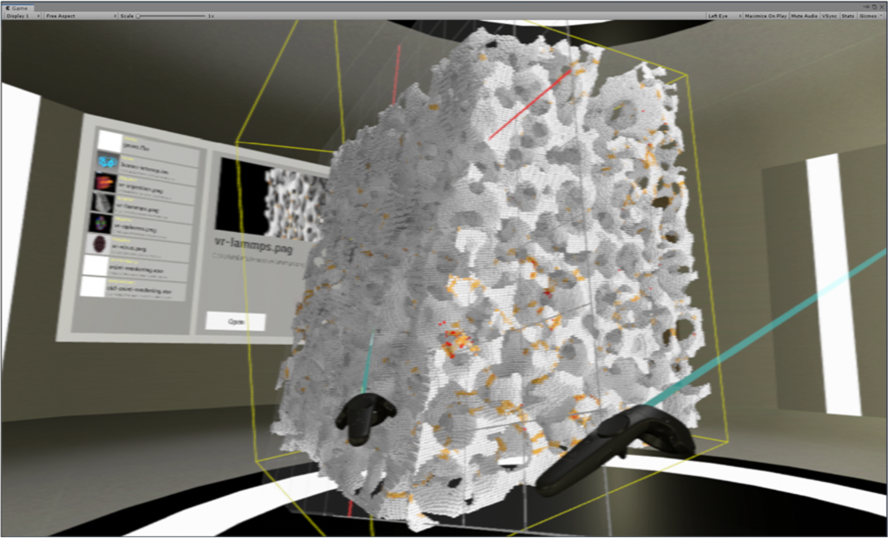

mint: Integrating scientific visualizations into virtual reality (Journal of Visualization, 2024)

Sergej Geringer, Florian Geiselhart, Alex Bäuerle, Dominik Dec, Olivia Odenthal, Guido Reina, Timo Ropinski, and Daniel Weiskopf

We present an image-based approach to integrate state-of-the-art scientific visualization into virtual reality (VR) environments: the mint visualization/VR inter-operation system. We enable the integration of visualization algorithms from within their software frameworks directly into VR without the need to explicitly port visualization implementations to the underlying VR framework—thus retaining their capabilities, specializations, and optimizations.

An In-depth Look at Gemini’s Language Abilities (arXiv, 2023)

Syeda Nahida Akter, Zichun Yu, Aashiq Muhamed, Tianyue Ou, Alex Bäuerle, Ángel Alexander Cabrera, Krish Dholakia, Chenyan Xiong, and Graham Neubig

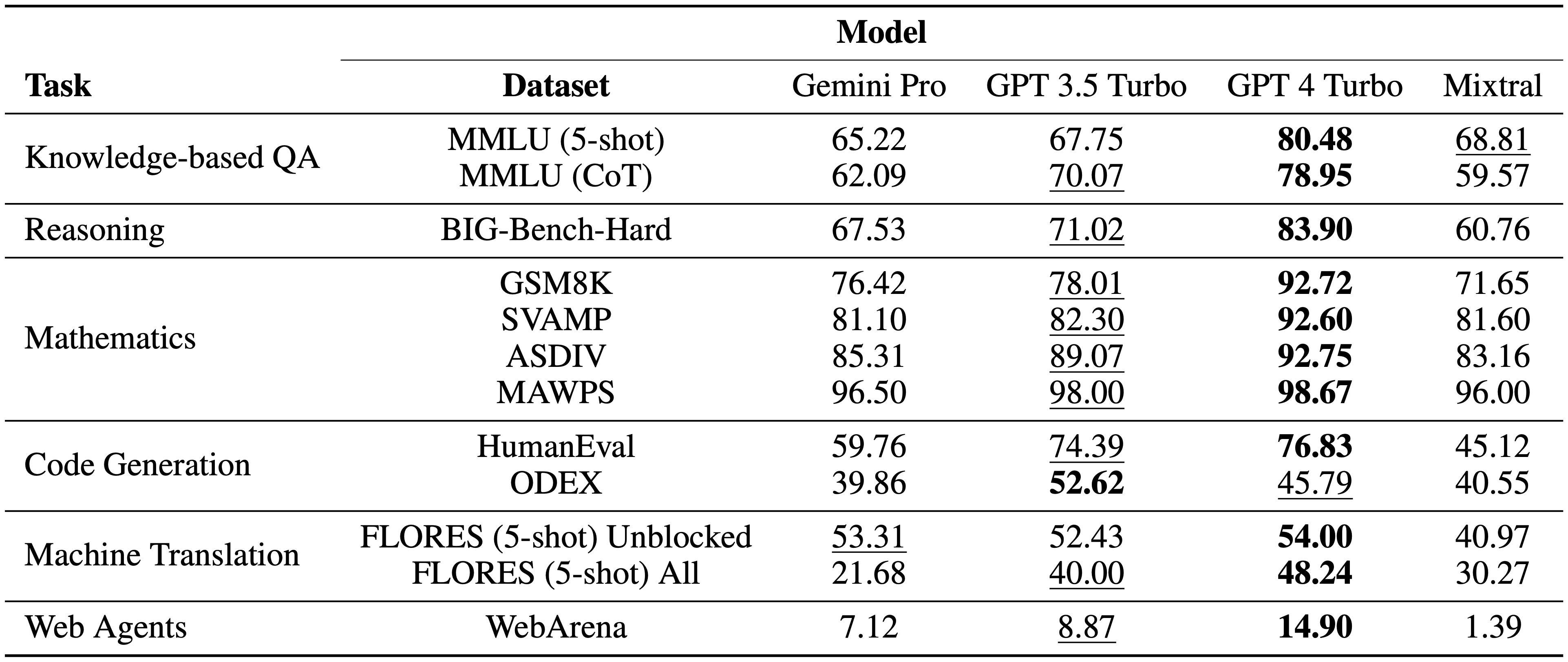

We do an in-depth exploration of Gemini’s language abilities, making two contributions. First, we provide a third-party, objective comparison of the abilities of the OpenAI GPT and Google Gemini models with reproducible code and fully transparent results. Second, we take a closer look at the results, identifying areas where one of the two model classes excels. We perform this analysis over 10 datasets testing a variety of language abilities, including reasoning, answering knowledge-based questions, solving math problems, translating between languages, generating code, and acting as instruction-following agents.

VegaProf: Profiling Vega Visualizations (UIST, 2023)

Junran Yang, Alex Bäuerle, Dominik Moritz, and Çağatay Demiralp

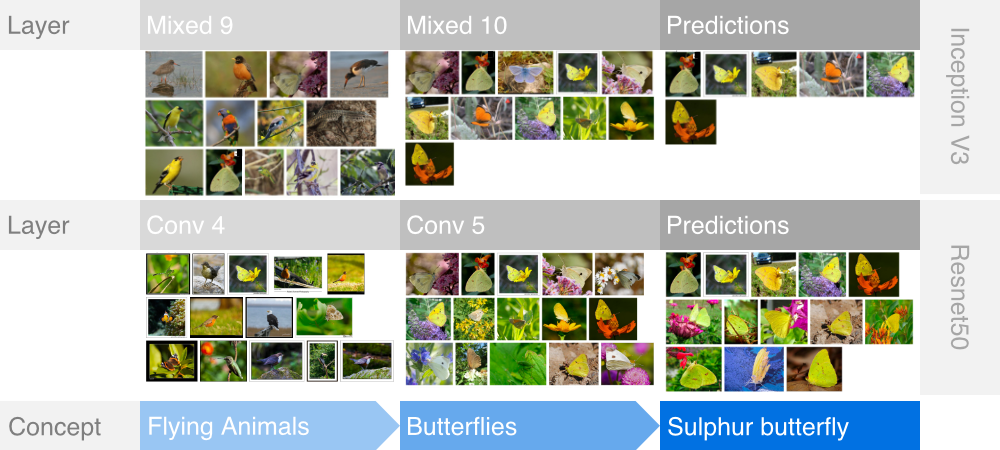

To analyze what a model layer has learned, we present a method that takes into account the entire activation distribution. By extracting similar activation profiles within the high-dimensional activation space of a neural network layer, we find groups of inputs that are treated similarly. These input groups represent neural activation patterns (NAPs) and can be used to visualize and interpret learned layer concepts. We tested our method with a variety of networks and show how it complements existing methods for analyzing neural network activation values.

Semantic Hierarchical Exploration of Large Image Datasets (EuroVis Short Papers, 2023)

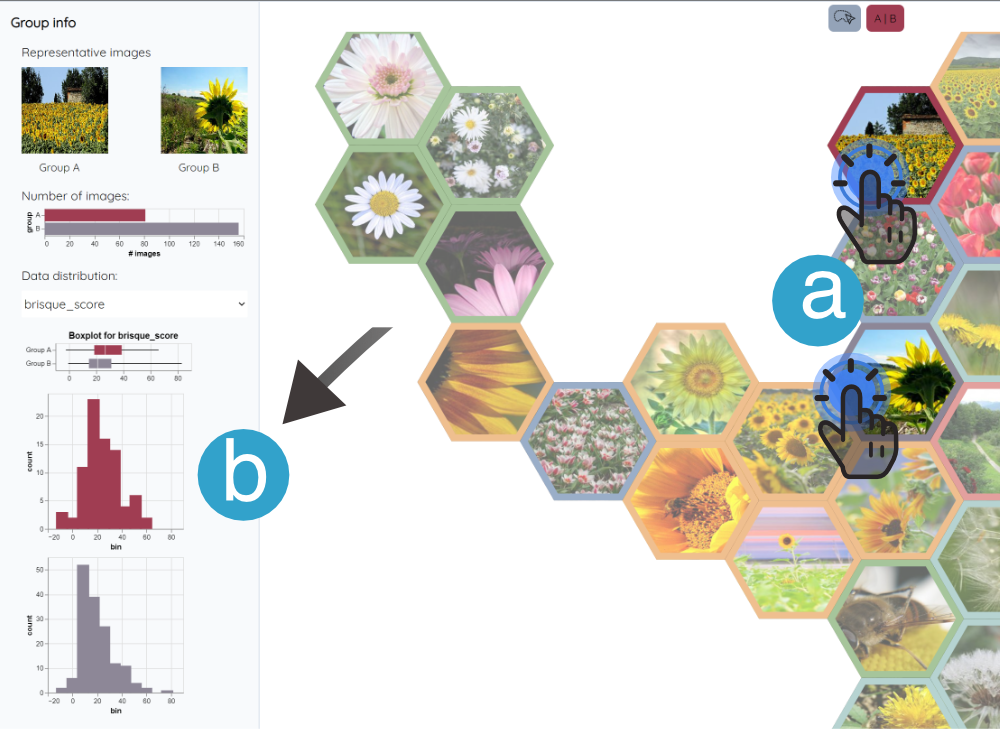

Alex Bäuerle , Christian van Onzenoodt , Daniel Jönsson , andTimo Ropinski

Browsing many images at the same time requires either a large screen space or an abundance of scrolling interaction. We address this problem by projecting the images onto a two-dimensional Cartesian coordinate system by combining the latent space of vision neural networks and dimensionality reduction techniques. To alleviate overdraw of the images, we integrate a hierarchical layout and navigation, where each group of similar images is represented by the image closest to the group center. Advanced interactive analysis of images in relation to their metadata is enabled through integrated, flexible filtering based on expressions.

Neural Activation Patterns (NAPs): Visual Explainability of Learned Concepts (arXiv, 2022)

Alex Bäuerle , Daniel Jönsson , andTimo Ropinski

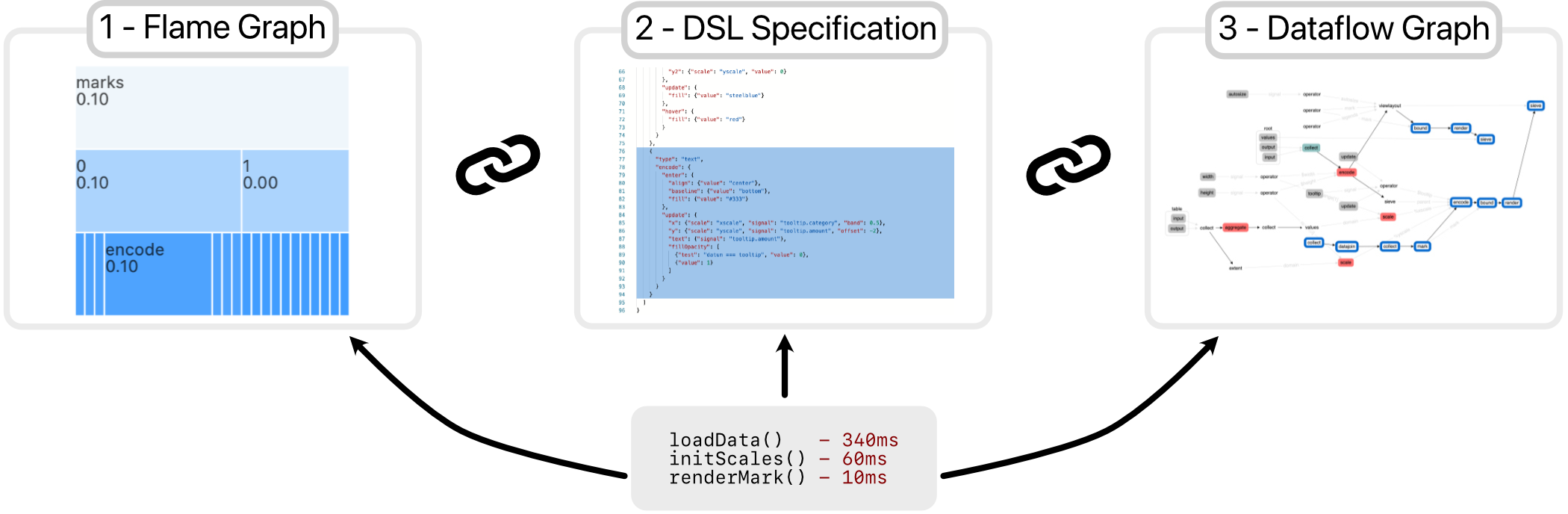

We introduce VegaProf, the first performance profiler for Vega visualizations. VegaProf effectively instruments the Vega library by associating the declarative specification with its compilation and execution. Using interactive visualizations, VegaProf enables visualization engineers to interactively profile visualization performance at three abstraction levels: function, dataflow graph, and visualization specification.

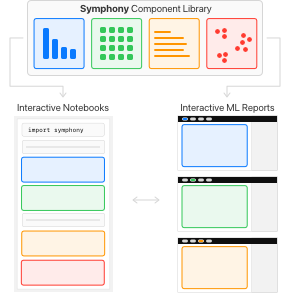

Symphony: Composing Interactive Interfaces for Machine Learning (CHI, 2022)

Alex Bäuerle, Ángel Alexander Cabrera, Fred Hohman, Megan Maher, David Koski, Xavier Suau, Titus Barik, and Dominik Moritz

Interfaces for machine learning (ML) can help practitioners build robust and responsible ML systems. While existing ML interfaces are effective for specific tasks, they are not designed to be reused, explored, and shared by multiple stakeholders in cross-functional teams. To enable analysis and communication between different ML practitioners, we designed and implemented Symphony, a framework for composing interactive ML interfaces with task-specific, data-driven components that can be used across platforms such as computational notebooks and web dashboards. Symphony helped ML practitioners discover previously unknown issues like data duplicates and blind spots in models while enabling them to share insights with other stakeholders.

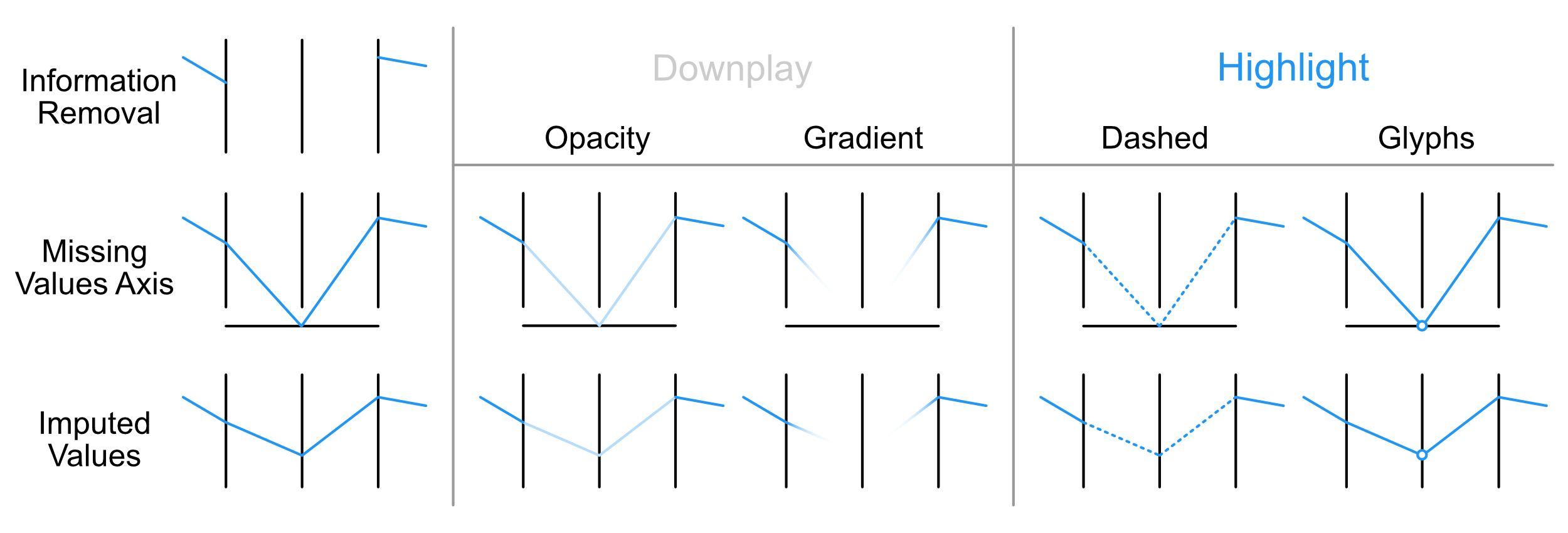

Where did my Lines go? Visualizing Missing Data in Parallel Coordinates (EuroVis, 2022)

Alex Bäuerle, Christian van Onzenoodt, Simon der Kinderen, Jimmy Johansson Westberg, Daniel Jönsson, and Timo Ropinski

We evaluate visualization concepts for parallel coordinates to represent missing values and focus on the trade-off between the ability to perceive missing values and the concept's impact on common parallel coordinates tasks. We performed a crowd-sourced, quantitative user study with 732 participants comparing the concepts and their variations using five real-world data sets. Based on our findings, we provide suggestions regarding which visual encoding works best depending on the task at focus.

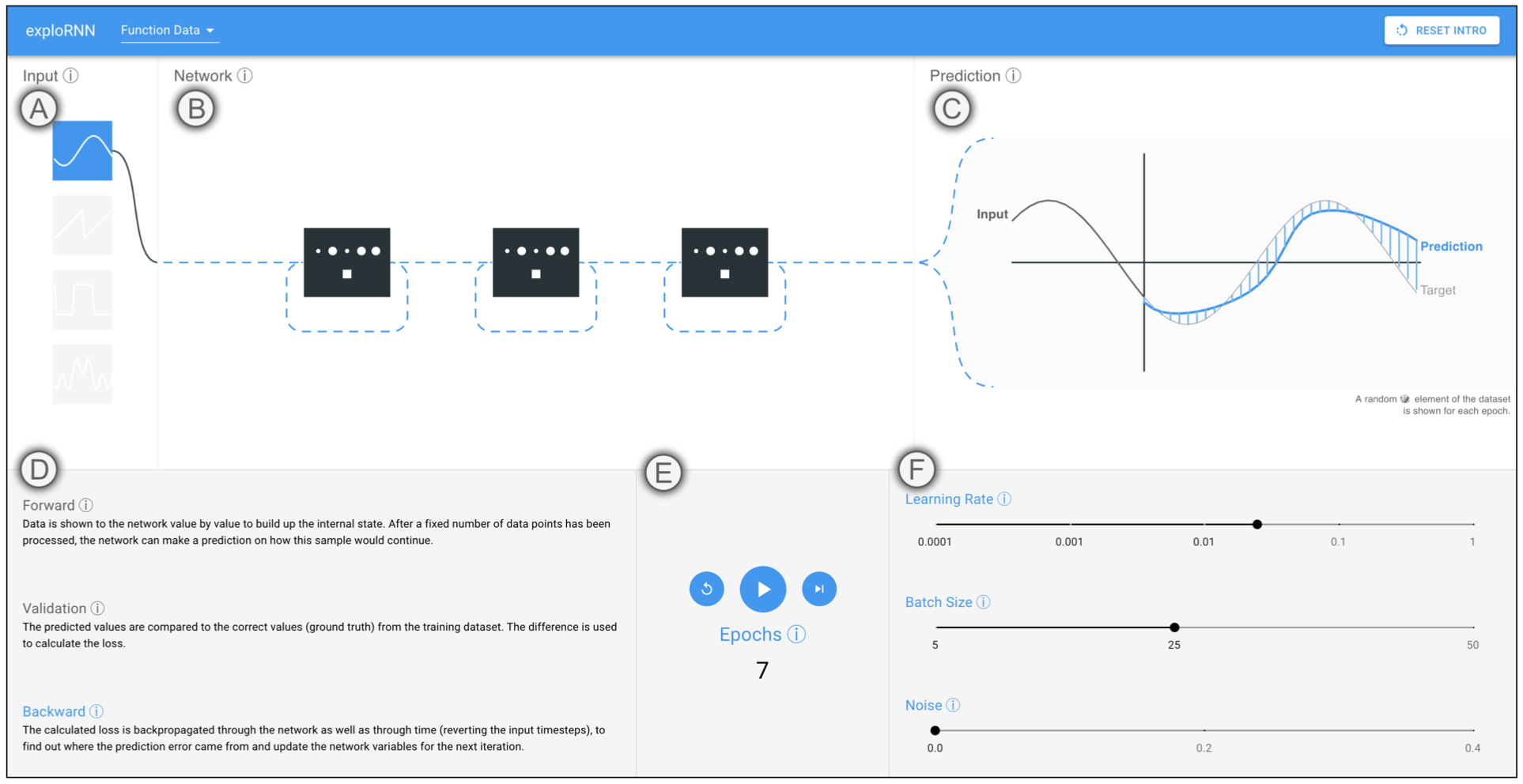

exploRNN: Understanding Recurrent Neural Networks through Visual Exploration (The Visual Computer, 2022)

Alex Bäuerle, Patrick Albus, Raphael Störk, Tina Seufert, and Timo Ropinski

Visualization has proven to be of great help with learning about neural network processes. While most current educational visualizations target one specific architecture or use case, recurrent neural networks (RNNs), which are capable of processing sequential data, are not covered yet, even though tasks on sequential data, such as text and function analysis, are at the forefront of deep learning research. Therefore, we propose exploRNN, the first interactively explorable, educational visualization for RNNs.

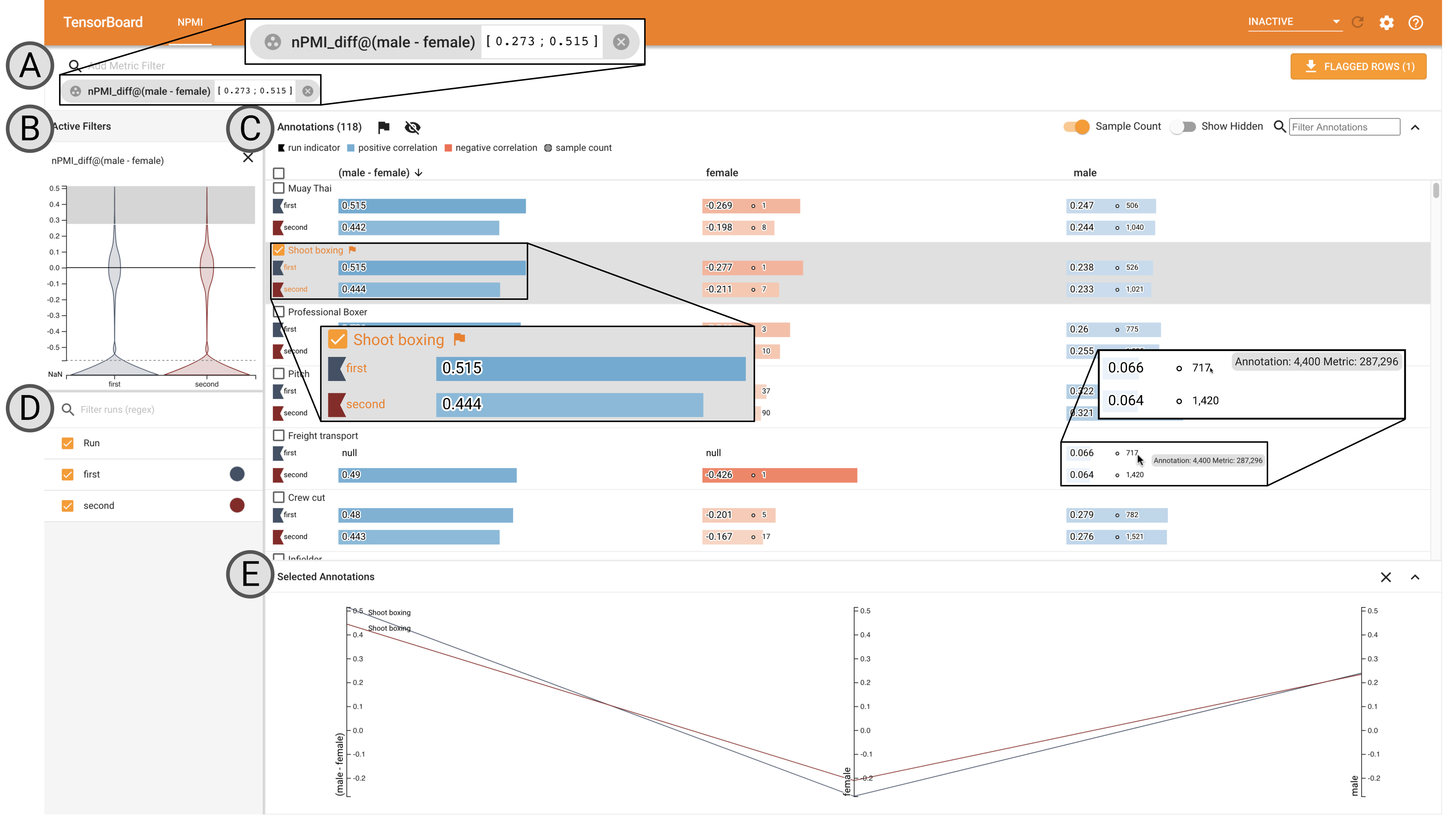

Visual Identification of Problematic Bias in Large Label Spaces (arXiv, 2022)

Alex Bäuerle, Aybuke Gul Turker, Ken Burke, Osman Aka, Timo Ropinski, Christina Greer, and Mani Varadarajan

While the need for well-trained, fair ML systems is increasing ever more, measuring fairness for modern models and datasets is becoming increasingly difficult as they grow at an unprecedented pace. Indeed, this often rules out the application of traditional analysis metrics and systems. Addressing the lack of visualization work in this area, we propose guidelines for designing visualizations for such large label spaces, considering both technical and ethical issues. Our proposed visualization approach can be integrated into classical model and data pipelines, and we provide an implementation of our techniques open-sourced as a TensorBoard plug-in.

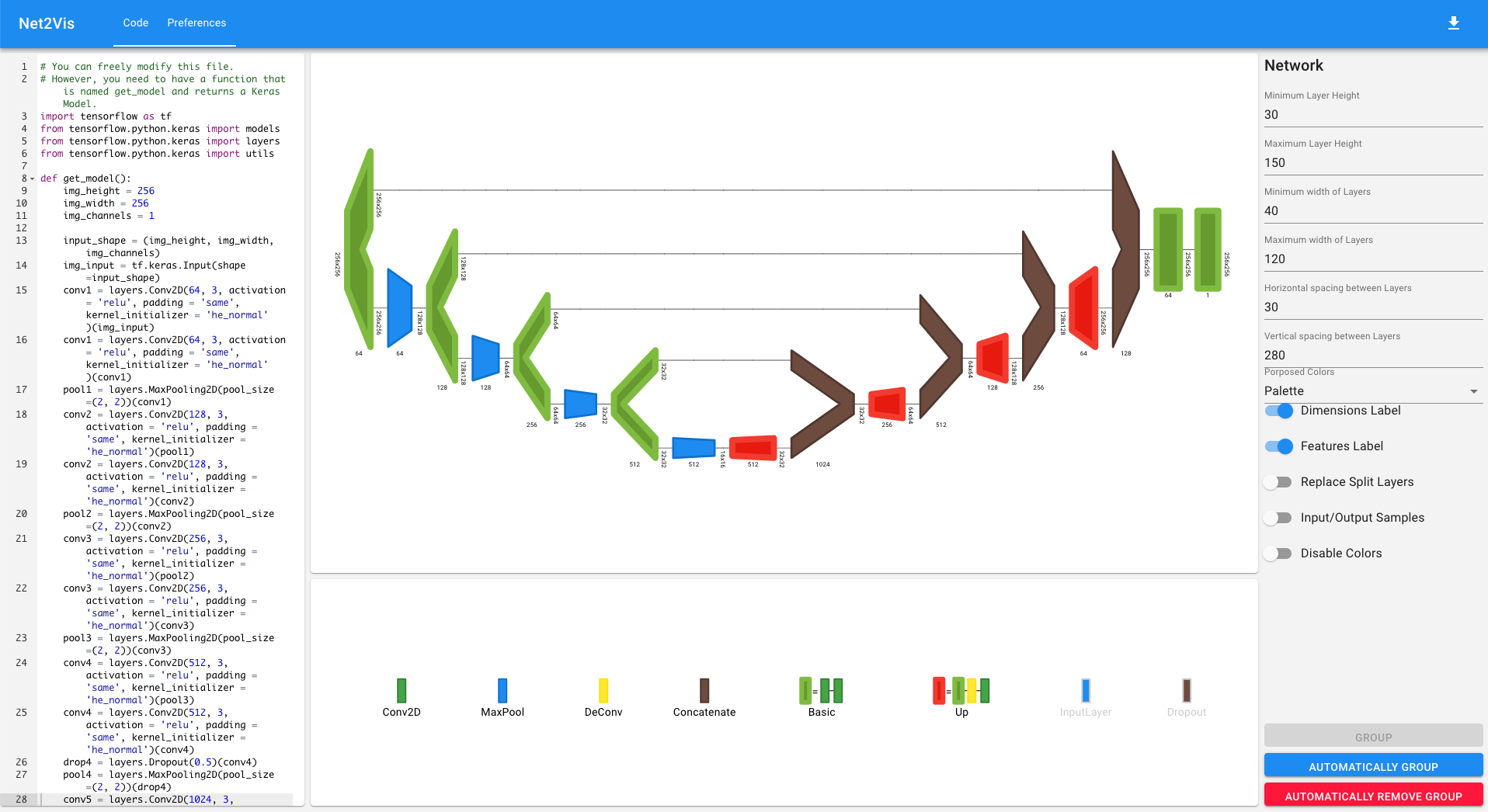

Net2Vis - A Visual Grammar for Automatically Generating Publication-Tailored CNN Architecture Visualizations (TVCG, 2021)

Alex Bäuerle, Christian van Onzenoodt, and Timo Ropinski

To convey neural network architectures in publications, appropriate visualizations are of great importance. This project is aimed at automatically generating such visualizations from code. Thus, we are able to employ a common visual grammar, reduce the time investment towards these visualizations significantly, and reduce errors in these visualizations.

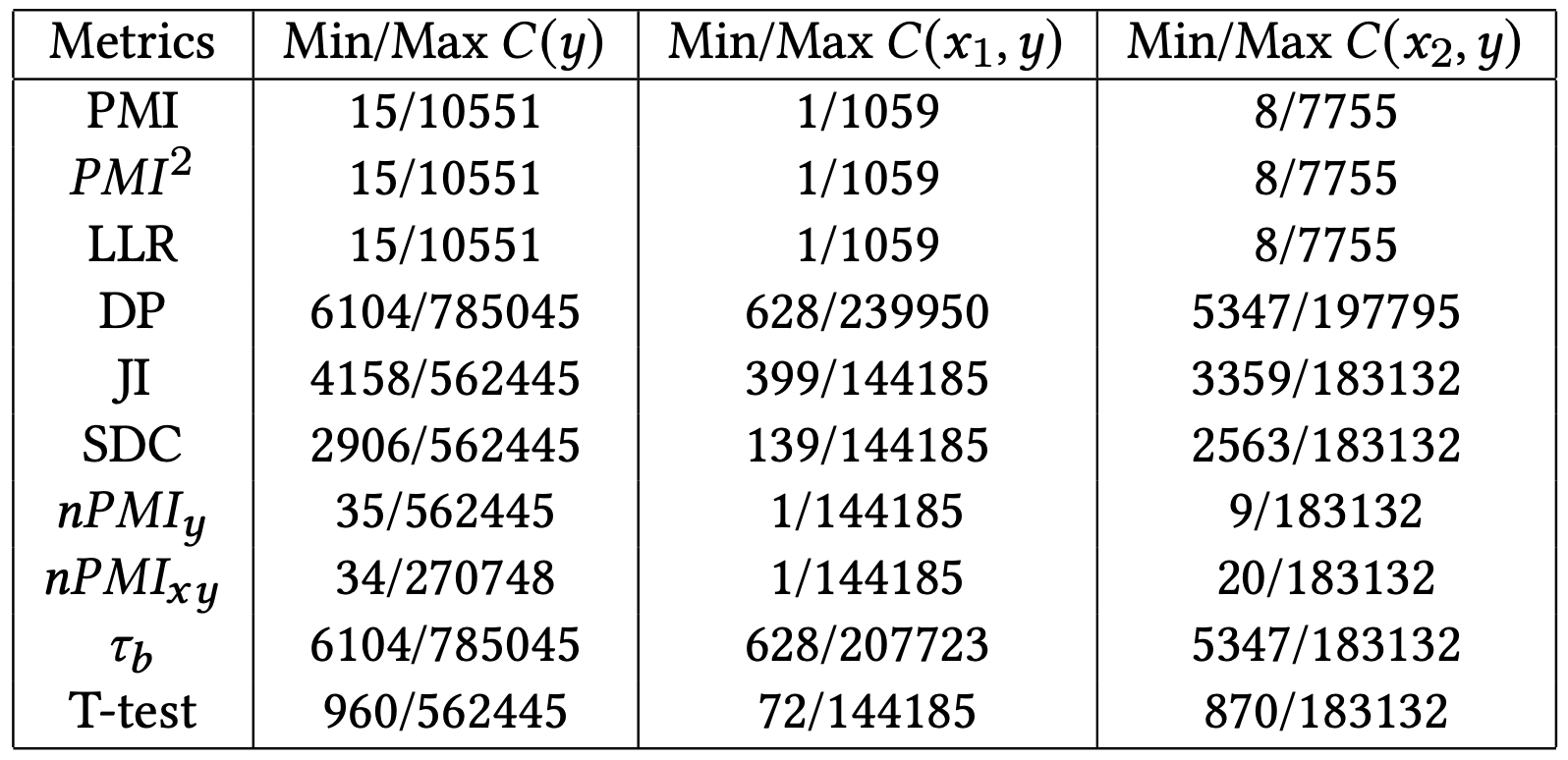

Measuring Model Biases in the Absence of Ground Truth (AIES, 2021)

Osman Aka, Ken Burke, Alex Bäuerle, Christina Greer, and Margaret Mitchell

Model fairness is getting more and more important. At the same time, datasets are getting larger and ground truth more sparse. In this paper, we evaluate bias detection algorithms that can be used without ground truth at hand.

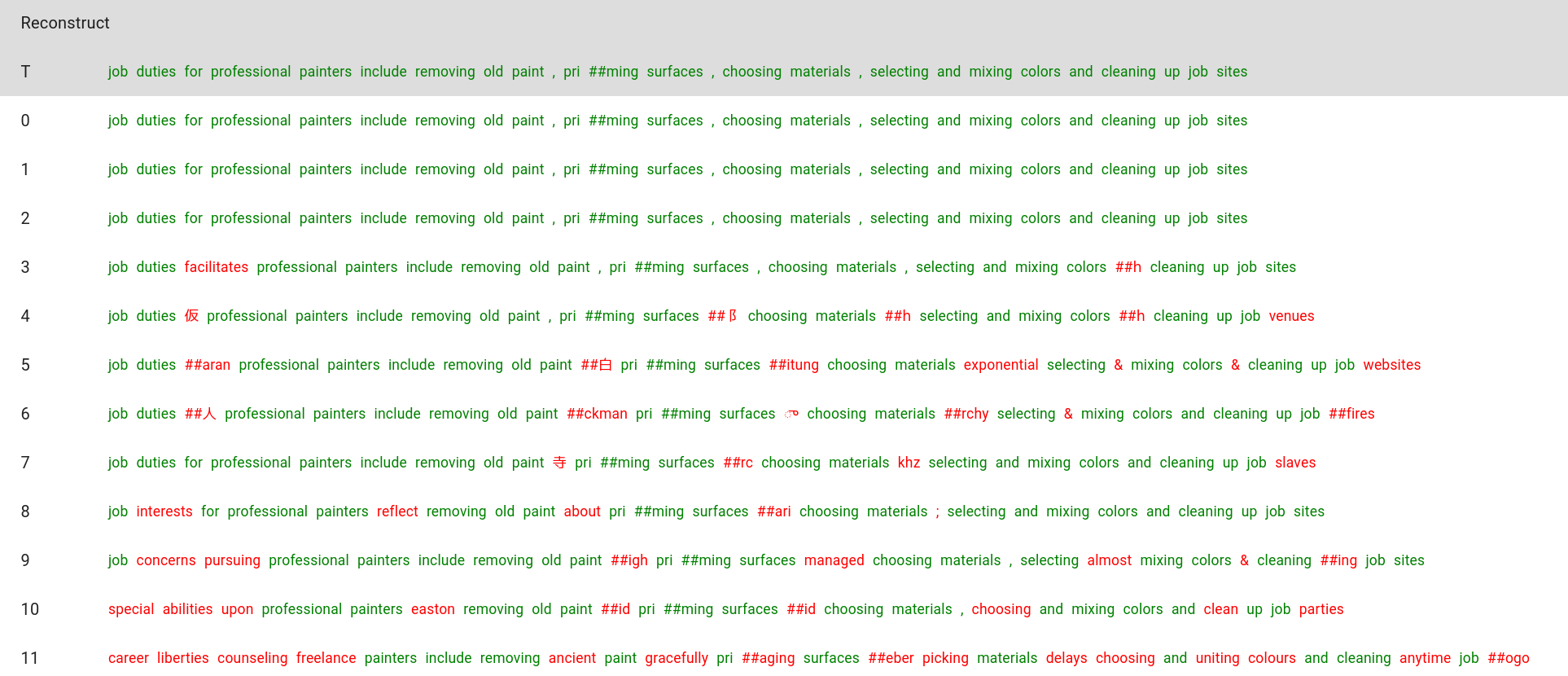

What does BERT dream of? (VISxAI, 2020)

Alex Bäuerle and James Wexler

In this internship project with the Google PAIR team, we investigated how Feature Visualization could be transferred to the text domain and conducted several experiments in this line of research. While Feature Visualization did not work out as well as we hoped, this provides new insight into the realm of deep dreaming with text.

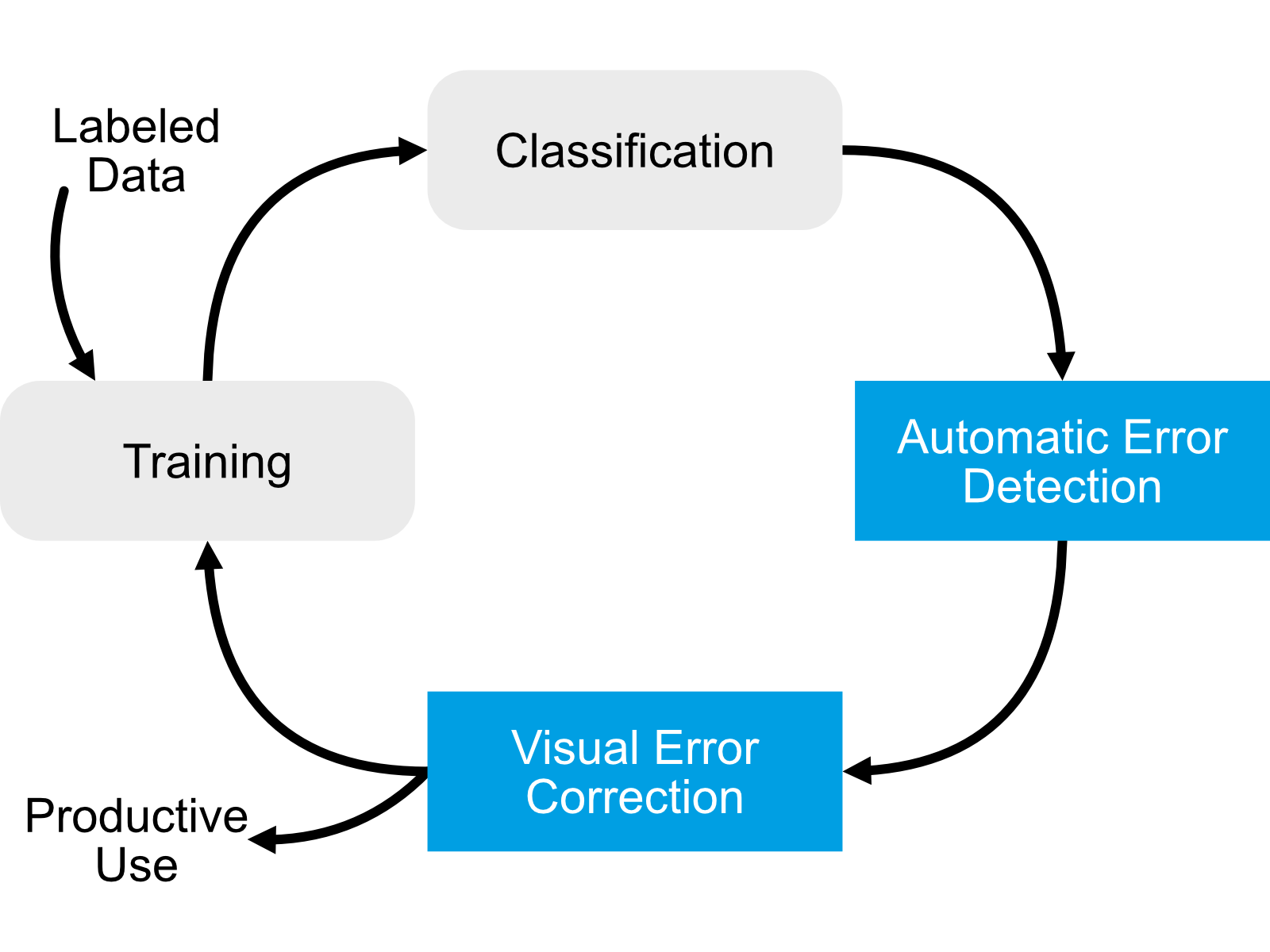

Classifier-Guided Visual Correction of Noisy Labels for Image Classification Tasks (EuroVis, 2020)

Alex Bäuerle, Heiko Neumann, and Timo Ropinski

Training data plays an essential role in modern applications of machine learning. In this project, we provide means to visually guide users towards potential errors in such datasets. Our guidance, which is built on common labeling error types we propose, can be plugged into any classification pipeline.

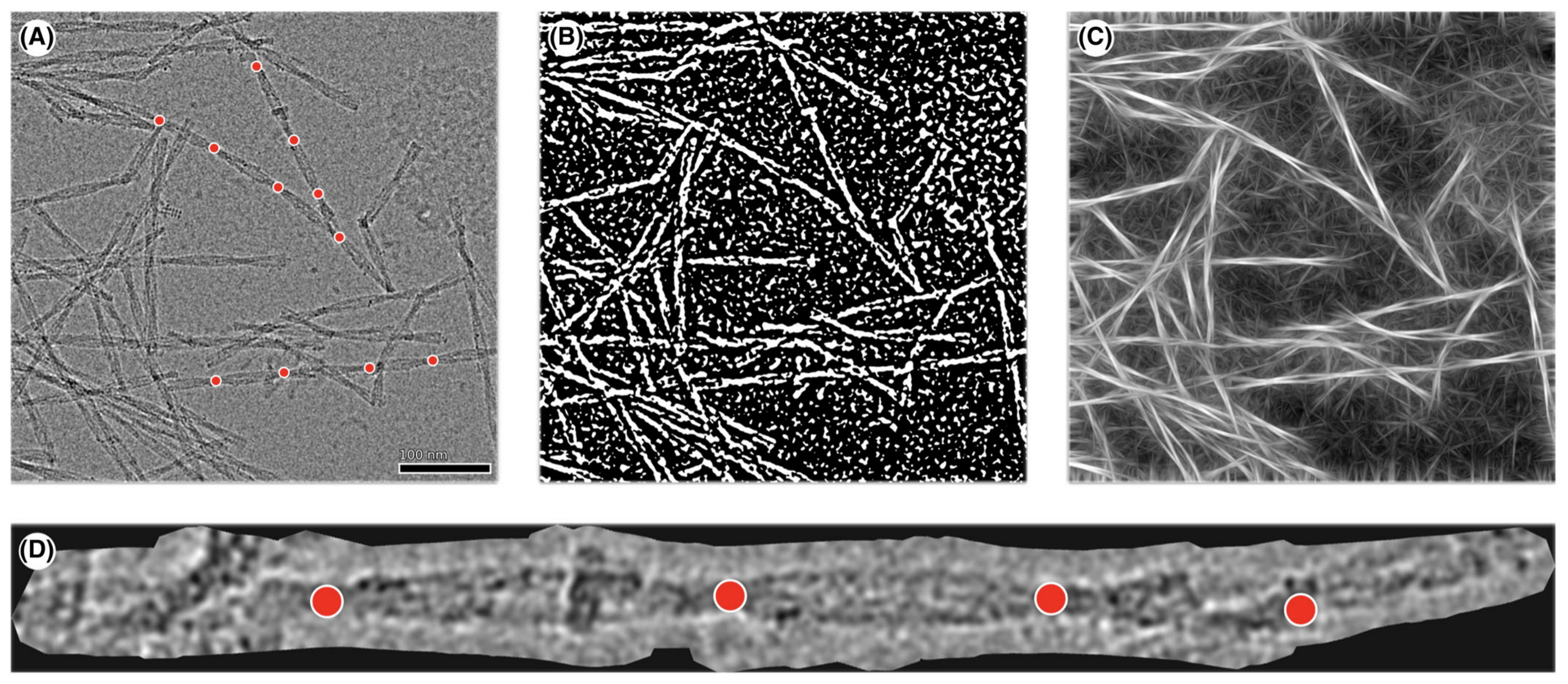

Automatic identification of crossovers in cryo‐EM images of murine amyloid protein A fibrils with machine learning (Journal of Microscopy, 2020)

Matthias Weber, Alex Bäuerle, Matthias Schmidt, Matthias Neumann, Marcus Fähndrich, Timo Ropinski, and Volker Schmidt

Detecting crossovers in cryo-electron microscopy images of protein fibrils is an important step towards determining the morphological composition of a sample. We propose a combination of classical, stochastic approaches, and machine learning techniques towards solving this problem in a novel, much easier way.

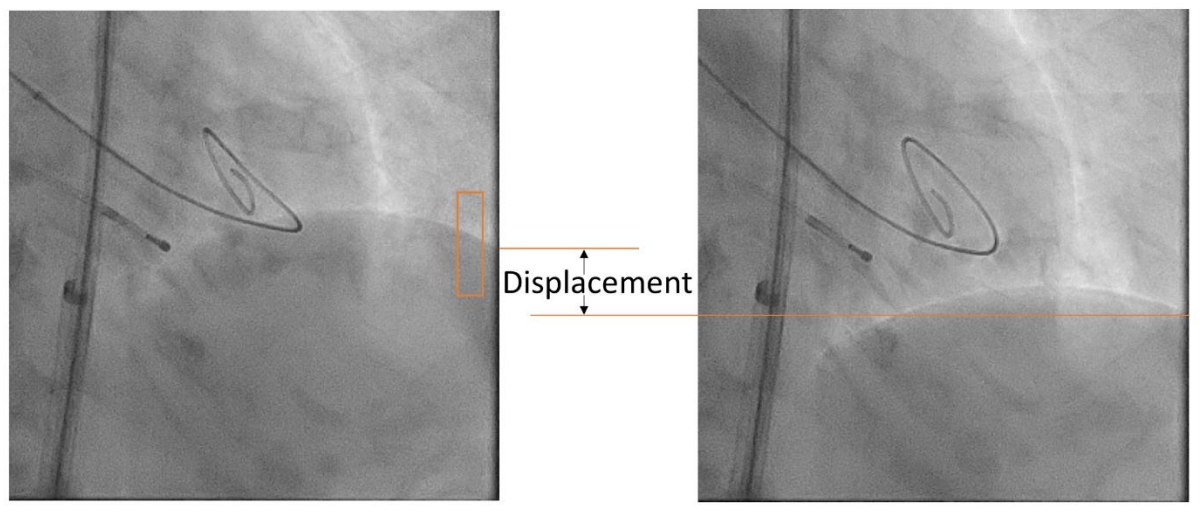

Convolutional neural network (CNN) applied to respiratory motion detection in fluoroscopic frames (International Journal of Computer Assisted Radiology and Surgery, 2019)

Christoph Baldauf, Alex Bäuerle, Timo Ropinski, Volker Rasche, and Ina Vernikouskaya

To support surgeons during procedures conducted under X-ray fluoroscopy guidance, real-time fluoroscopy is augmented with organ shape models. Following initial registration, respiratory motion is a major cause of mismatch in the superposition. This work evaluates convolutional neural networks (CNNs) as a novel approach to address this problem.